Gemma models share technical and infrastructure components with capable Gemini AI models. (Image – Google)

Google Gemma: An open source AI model for everyone?

- Google unveils Gemma, an open source AI model.

- Gemma models share technical and infrastructure components with capable Gemini AI models.

- Gemma will be available in two variants: Gemma 2B (2 billion parameters) and Gemma 7B (7 billion parameters).

Tech companies continue to give developers more options to develop AI use cases through open source AI models. Developers can access open source AI models to modify and contribute to various projects and tools. The goal of open source AI is to accelerate the development and innovation of AI technologies, as well as to ensure their transparency, accountability, and ethical use.

Today, open source AI can be used for various tasks and applications, such as content creation, email marketing, ad targeting, natural language processing, computer vision, robotics, and more. Open source AI can also help democratize AI by making it more accessible and affordable to everyone.

But open source AI also comes with some challenges and risks, such as security, privacy, quality, and governance issues. Therefore, it is important to use open source AI responsibly and ethically and to follow the best practices and guidelines of the community.

Some well-known AI models and tools, not all of which are open source, include:

- OpenAI’s GPT-4 – a large multimodal model that can understand and generate natural language or code. It is proprietary and available only through OpenAI’s products and API.

- OpenAI’s Sora – a text-to-video model that can create realistic and imaginative scenes from text instructions. It is also proprietary.

- Meta’s PyTorch – an open source framework (rather than a model) for developing and training deep learning models, with a focus on flexibility and dynamic computation graphs.

- Meta’s LLaMA 2 – a large language model that can generate natural language from text prompts, and is free to use for research and most commercial purposes under Meta’s community license.

Is Gemma really an open source AI model?

Google joins the open AI community with Gemma

Google Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models. According to Burak Gokturk, VP & GM of Cloud AI at Google, Google Cloud customers can start customizing and building with Gemma models in Vertex AI and running them on Google Kubernetes Engine (GKE).

Gemma will be available in two variants: Gemma 2B (2 billion parameters) and Gemma 7B (7 billion parameters). Each size is released with pre-trained and instruction-tuned variants. There is also a new Responsible Generative AI Toolkit that provides guidance and essential tools for creating safer AI applications with Gemma.

Other features include:

- Toolchains for inference and supervised fine-tuning (SFT) across all major frameworks: JAX, PyTorch, and TensorFlow through native Keras 3.0.

- Ready-to-use Colab and Kaggle notebooks, alongside integration with popular tools such as Hugging Face, MaxText, Nvidia NeMo and TensorRT-LLM, make it easy to get started with Gemma.

- Pre-trained and instruction-tuned Gemma models can run on your laptop, workstation, or Google Cloud with easy deployment on Vertex AI and Google Kubernetes Engine (GKE).

- Optimization across multiple AI hardware platforms, including Nvidia GPUs and Google Cloud TPUs, ensures industry-leading performance.

- Terms of use permit responsible commercial usage and distribution for all organizations, regardless of size.
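As a rough sanity check on the claim that these models can run on a laptop or workstation, the memory needed just to hold the weights is approximately the parameter count multiplied by the bytes per parameter. The sketch below is illustrative only: it assumes the nominal 2 billion and 7 billion parameter counts implied by the model names, the numeric formats listed are assumptions rather than formats confirmed in Google's announcement, and memory for activations and caches is ignored.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumptions: nominal parameter counts taken from the model names (2B, 7B);
# the dtype list is illustrative; activations and caches are not counted.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}
NOMINAL_PARAMS = {"Gemma 2B": 2e9, "Gemma 7B": 7e9}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def memory_table() -> dict:
    """Map each model name to its estimated weight size per dtype."""
    return {
        name: {dtype: weight_memory_gb(count, dtype) for dtype in BYTES_PER_PARAM}
        for name, count in NOMINAL_PARAMS.items()
    }


if __name__ == "__main__":
    for name, row in memory_table().items():
        sizes = ", ".join(f"{dtype}: {gb:.1f} GB" for dtype, gb in row.items())
        print(f"{name} -> {sizes}")
```

Under these assumptions, a 2B model at 16-bit precision needs about 4 GB for its weights, which is within reach of many laptops, while a 7B model at the same precision needs about 14 GB and is more comfortable on a workstation GPU or in the cloud unless it is quantized.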

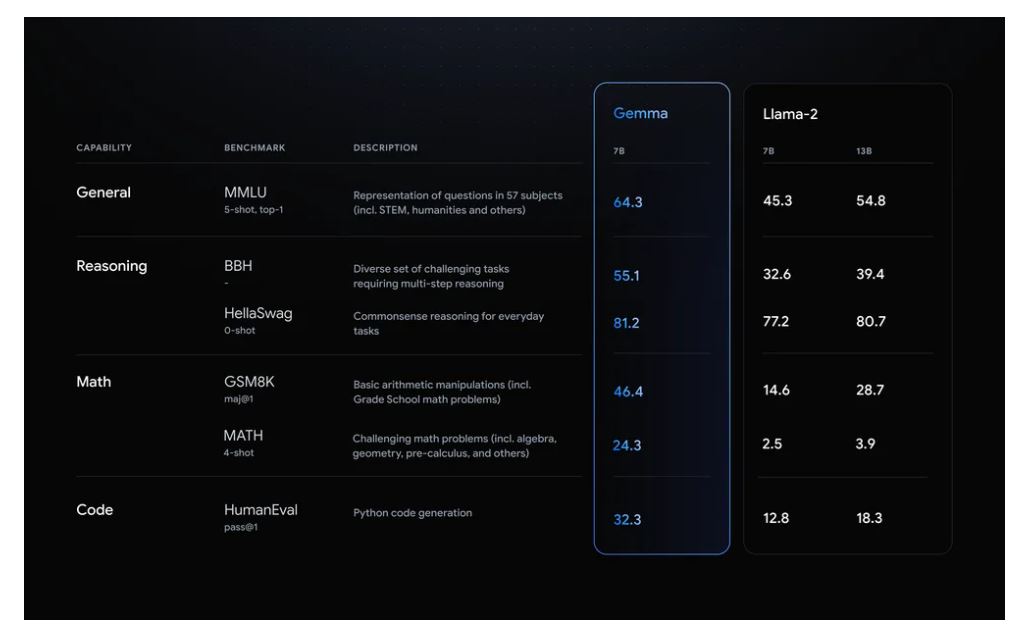

Google has also claimed that Gemma outperforms Meta’s LLaMA 2 on several benchmarks as demonstrated in the image below.

Comparison between Gemma and LLaMA 2. (Source – Google).

“By using Gemma models on Vertex AI, developers can take advantage of an end-to-end ML platform that makes tuning, managing, and monitoring models simple and intuitive. With Vertex AI, builders can reduce operational overhead and focus on creating bespoke versions of Gemma that are optimized for their use case,” said Gokturk.

For example, using Gemma models on Vertex AI, developers can:

- Build generative AI apps for lightweight tasks such as text generation, summarization, and Q&A

- Enable research and development using lightweight but customized models for exploration and experimentation

- Support real-time generative AI use cases that require low latency, such as streaming text

While Google presents Gemma as an open model, there have been reports that it stops short of being fully open source. As such, Google may still have a hand in setting terms of use and ownership.

A report by Reuters explained that some experts have said open source AI was ripe for abuse, while others have championed the approach for widening the set of people who can contribute to and benefit from the technology.

Google and Nvidia

In a blog post, Nvidia stated that teams from both companies worked closely together to accelerate the performance of Gemma with Nvidia TensorRT-LLM. TensorRT-LLM is an open-source library for optimizing large language model inference when running on Nvidia GPUs in the data center, in the cloud, and on PCs with Nvidia RTX GPUs.

This allows developers to target the installed base of over 100 million Nvidia RTX GPUs available in high-performance AI PCs globally.

Nvidia said Gemma will be supported by Chat with RTX. Chat with RTX is an Nvidia tech demo that uses retrieval-augmented generation and TensorRT-LLM software to give users generative AI capabilities on their local, RTX-powered Windows PCs.

Chat with RTX lets users personalize a chatbot with their own data by easily connecting local files on a PC to a large language model. As the model runs locally, it will be able to provide fast results while also ensuring user data stays on the device.
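To make the idea of retrieval-augmented generation concrete, here is a minimal sketch of the general pattern: find the local text most relevant to a question, then place it in the prompt that would be sent to a language model. This is not how Chat with RTX is implemented. The file names, the sample contents, the word-overlap scoring, and the prompt wording are all hypothetical, and the model call itself is left out; production systems typically use learned embeddings and a vector index instead.

```python
import math
import re
from collections import Counter

# Hypothetical stand-ins for local files on a user's PC.
LOCAL_FILES = {
    "trip.txt": "The flight to Lisbon leaves on Friday at 9 am from gate 12.",
    "recipe.txt": "Mix flour, eggs and milk, then fry the pancakes in butter.",
}


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: dict, k: int = 1) -> list:
    """Return the names of the k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents.items(),
        key=lambda item: cosine(query_vec, Counter(tokenize(item[1]))),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


def build_prompt(query: str, documents: dict, k: int = 1) -> str:
    """Assemble a prompt that grounds the question in retrieved text."""
    context = "\n".join(
        f"[{name}] {documents[name]}" for name in retrieve(query, documents, k)
    )
    return f"Use only this context to answer.\n{context}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    print(build_prompt("When does the flight to Lisbon leave?", LOCAL_FILES))
```

Because both retrieval and prompt assembly happen on the machine that holds the files, nothing in this flow requires the documents to leave the device, which is the privacy property the article attributes to running the model locally.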
