AWS becomes the first cloud provider to launch Nvidia GH200 superchips with NVLink for AI cloud infrastructure.

- AWS and Nvidia launch GH200 superchips, revolutionizing cloud AI.

- AWS-Nvidia’s expanded alliance brings groundbreaking EC2 instances and a top-tier AI supercomputer.

- The collaboration introduces advanced EC2 instances and AI software, enhancing cloud AI capabilities.

In cloud computing and artificial intelligence, the strategic collaboration between AWS and Nvidia has been a cornerstone of innovation for over a decade. Focused on GPU-based solutions across domains such as AI/ML, graphics, gaming, and high-performance computing, the alliance has continually adapted to the latest technological advancements, extending its reach from the cloud, with Nvidia GPU-powered Amazon EC2 instances, to the edge, with services like AWS IoT Greengrass.

Evolving partnership: bridging cloud and AI with AWS and Nvidia

Building upon this rich history, AWS and Nvidia have recently announced a significant expansion of their strategic collaboration to deliver the most advanced infrastructure, software, and services to power generative AI innovations. This expanded collaboration will unite the best of both Nvidia and AWS technologies. It will incorporate Nvidia’s latest multi-node systems featuring next-generation GPUs, CPUs, and AI software, as well as AWS’s cutting-edge Nitro system for virtualization and security, the Elastic Fabric Adapter (EFA) interconnect, and UltraCluster scalability.

These technologies are ideally suited for training foundation models and constructing generative AI applications.

This latest development in the AWS-Nvidia partnership is an extension of their longstanding relationship and a leap into the future of generative AI. It builds on a foundation that has already fueled the generative AI era by providing early machine learning pioneers with the computing performance necessary to push the boundaries of these technologies.

In the expanded partnership to advance generative AI across various sectors:

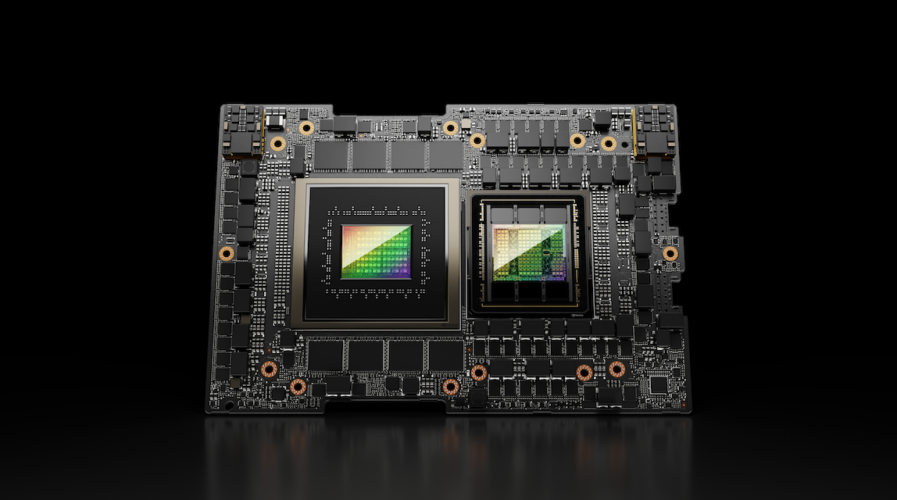

- AWS will be the first to offer Nvidia GH200 Grace Hopper superchips in the cloud, featuring the new multi-node NVLink technology. The GH200 NVL32 platform links 32 Grace Hopper superchips into a single instance, available on Amazon EC2 instances. This setup, supported by Amazon’s EFA networking, AWS Nitro system, and EC2 UltraClusters, allows customers to scale to thousands of GH200 superchips.

- Nvidia and AWS will launch Nvidia DGX Cloud on AWS, which will be the first DGX Cloud deployment to use GH200 NVL32. This AI-training-as-a-service offering will provide massive shared memory, accelerating the training of advanced generative AI and large language models with over 1 trillion parameters.

- The companies are collaborating on Project Ceiba to create the world’s fastest GPU-powered AI supercomputer. Hosted on AWS with GH200 NVL32 and Amazon EFA, this 65 exaflop-capable system, featuring 16,384 GH200 superchips, will drive Nvidia’s generative AI innovations.

- AWS is introducing three new EC2 instance types: P5e instances with Nvidia H200 Tensor Core GPUs for large-scale generative AI and HPC workloads, and G6 and G6e instances, powered by Nvidia L4 and L40S GPUs respectively, for a range of applications including AI, graphics, video workloads, and 3D applications using Nvidia Omniverse.
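
For a sense of scale, the Project Ceiba figures quoted above can be broken down with some back-of-the-envelope arithmetic. The short Python sketch below uses only the numbers in this article (16,384 superchips, 32 superchips per GH200 NVL32, 65 exaflops); the variable names are illustrative, and the even per-superchip split is an assumption rather than a published specification.

```python
# Figures quoted in the article for Project Ceiba.
CEIBA_SUPERCHIPS = 16_384     # GH200 superchips in the system
SUPERCHIPS_PER_NVL32 = 32     # superchips linked by one GH200 NVL32
CEIBA_EXAFLOPS = 65           # quoted AI processing capability

# Number of NVL32 groups the system would contain if every superchip
# sits in a full 32-way NVLink domain (assumption).
nvl32_units = CEIBA_SUPERCHIPS // SUPERCHIPS_PER_NVL32

# Average AI throughput per superchip, in petaflops
# (1 exaflop = 1000 petaflops), assuming an even split.
petaflops_per_superchip = CEIBA_EXAFLOPS * 1000 / CEIBA_SUPERCHIPS

print(f"NVL32 units: {nvl32_units}")
print(f"Approx. petaflops per superchip: {petaflops_per_superchip:.2f}")
```

In other words, the quoted totals work out to roughly 500 NVL32 groups and about 4 petaflops of AI compute per superchip, under the assumptions stated above.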

Revolutionizing cloud AI: introducing the Nvidia GH200 superchips in AWS

These advancements build upon a collaborative history that began with the world’s first GPU cloud instance. Today, Nvidia and AWS provide the most extensive array of Nvidia GPU solutions for diverse workloads, encompassing graphics, gaming, high-performance computing, machine learning, and now, generative AI.

Reflecting on the impact of these innovations, Adam Selipsky, CEO of AWS, emphasized AWS’s ongoing efforts with Nvidia to make AWS the top platform for running advanced GPUs. This effort includes integrating next-generation Nvidia Grace Hopper superchips with AWS’s robust EFA networking, the hyperscale clustering capabilities of EC2 UltraClusters, and the advanced virtualization offered by the AWS Nitro system.

“Generative AI is transforming cloud workloads and putting accelerated computing at the foundation of diverse content generation,” said Jensen Huang, founder and CEO of Nvidia. “Driven by a common mission to deliver cost-effective state-of-the-art generative AI to every customer, Nvidia and AWS are collaborating across the entire computing stack, spanning AI infrastructure, acceleration libraries, foundation models, to generative AI services.”

AWS and Nvidia have taken significant strides in their ongoing quest to revolutionize generative AI by introducing new Amazon EC2 instances. These instances feature the cutting-edge Nvidia GH200 Grace Hopper superchips with multi-node NVLink technology, marking AWS as the first cloud provider to offer this advanced capability. Each GH200 superchip combines an Arm-based Grace CPU and an Nvidia Hopper architecture GPU, integrated into the same module.

Supercomputing meets cloud

A standout feature of a single Amazon EC2 instance equipped with GH200 NVL32 is its ability to provide up to 20 TB of shared memory, essential for powering terabyte-scale workloads.

These instances use the power of AWS’s third-generation Elastic Fabric Adapter (EFA) interconnect, providing each superchip with up to 400 Gbps of networking throughput. This high performance is crucial for scaling to thousands of GH200 superchips within EC2 UltraClusters, enabling customers to efficiently manage large-scale AI/ML workloads.

The GH200-powered EC2 instances will also feature 4.5 TB of HBM3e memory, a significant increase over the current generation of H100-powered EC2 P5d instances. The improvement is not just in memory size but also in training performance, thanks to a CPU-to-GPU memory interconnect that offers far higher bandwidth than PCIe.
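
To put the per-instance numbers in perspective, the sketch below divides the quoted totals across the 32 superchips of a single GH200 NVL32 instance. It is a rough illustration only: it assumes decimal units (1 TB = 1000 GB), that the 4.5 TB HBM3e figure applies to one 32-superchip instance, and that every superchip reaches its peak EFA throughput at the same time, so the aggregate is an upper bound. The helper name `per_superchip_gb` is made up for this example.

```python
# Figures quoted in the article (decimal units assumed: 1 TB = 1000 GB).
SUPERCHIPS_PER_INSTANCE = 32      # GH200 NVL32
SHARED_MEMORY_TB = 20             # "up to 20 TB of shared memory"
HBM3E_TB = 4.5                    # "4.5 TB of HBM3e memory"
EFA_GBPS_PER_SUPERCHIP = 400      # "up to 400 Gbps" per superchip


def per_superchip_gb(total_tb: float, superchips: int = SUPERCHIPS_PER_INSTANCE) -> float:
    """Split an instance-wide capacity in TB evenly across superchips, in GB."""
    return total_tb * 1000 / superchips


shared_gb = per_superchip_gb(SHARED_MEMORY_TB)
hbm_gb = per_superchip_gb(HBM3E_TB)

# Upper bound on aggregate EFA throughput for one instance, in Tbps.
efa_tbps = SUPERCHIPS_PER_INSTANCE * EFA_GBPS_PER_SUPERCHIP / 1000

print(f"Shared memory per superchip: {shared_gb:.0f} GB")
print(f"HBM3e per superchip: {hbm_gb:.1f} GB")
print(f"Aggregate EFA throughput per instance: {efa_tbps:.1f} Tbps")
```

Under these assumptions, each superchip contributes a little over 600 GB to the shared memory pool, of which roughly 140 GB is HBM3e.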

These instances will be the first in AWS’s AI infrastructure to incorporate liquid cooling, ensuring optimal performance in densely packed server environments. They also benefit from the AWS Nitro system, enhancing I/O functions, performance, and security.

AWS and Nvidia partnership deepens with GPU clusters (Nitro enabled) (Source – X).

Under this expanded collaboration, AWS will also host Nvidia DGX Cloud powered by the GH200 NVL32 NVLink infrastructure. The Nvidia DGX Cloud is an AI supercomputing service that gives enterprises rapid access to multi-node supercomputing capabilities. This service is critical for training complex LLM and generative AI models, and is complemented by integrated Nvidia AI Enterprise software and direct access to Nvidia AI experts.

In parallel, AWS and Nvidia are working on the massive Project Ceiba supercomputer. Integrated with AWS services like Amazon VPC and Amazon Elastic Block Store, this supercomputer will play a pivotal role in Nvidia’s research and development across various fields, including LLMs, digital biology, and autonomous vehicles.

AWS is set to introduce new EC2 instances like P5e, G6, and G6e, each powered by Nvidia’s latest GPUs. The P5e instances, equipped with H200 GPUs, are tailored for large-scale generative AI and HPC tasks.

Meanwhile, the G6 and G6e instances, powered by Nvidia L4 and L40S GPUs respectively, are designed for applications such as AI fine-tuning, graphics, and video workloads. The G6e instances are also suited to developing complex 3D workflows and digital twins using Nvidia Omniverse. These instances reflect AWS and Nvidia’s commitment to providing cost-effective, energy-efficient solutions.

Nvidia’s software innovations on AWS

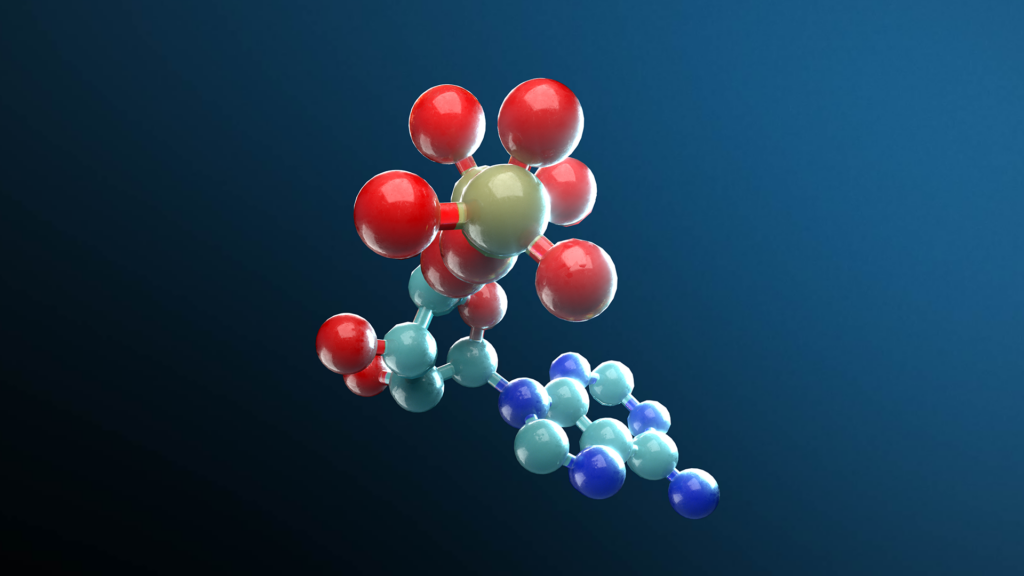

To complement these hardware advancements, Nvidia has also announced new software on AWS to bolster generative AI development. This includes the Nvidia NeMo Retriever microservice, which offers tools for building chatbots and summarization tools, and BioNeMo, which is intended to accelerate drug discovery.

Nvidia BioNeMo is set to accelerate drug discovery. (Source – Nvidia).

These software solutions are helping Amazon innovate its services and operations, as seen in Amazon Robotics’ use of Nvidia Omniverse Isaac for digital twin development and Amazon’s use of the Nvidia NeMo framework for training Amazon Titan LLMs.

Through these collaborative efforts, AWS and Nvidia are not only supercharging generative AI, HPC, design, and simulation capabilities but also democratizing access to state-of-the-art AI technologies for a broad range of companies and developers.
