Blending AI and open source to reshape innovation. (Source – Shutterstock).

GitHub’s vision on AI, open source, and the new frontier of innovation

- Mike Linksvayer, VP of developer policy at GitHub, highlights the significant impacts of AI and open source on productivity, innovation metrics, and data ethics.

- He stresses the need for balanced AI regulation and addresses AI-induced economic disparities.

- Regulation should be based on the area of deployment, rather than the technology itself.

The role of software development has constantly evolved throughout the history of computers, from the era of programming with punch cards to the present day. Over those decades, software engineers have also become thousands of times more productive.

As developers become more productive, they broaden the possibilities of what can be achieved with software. This increase in productivity makes business processes more efficient and accurate and drives innovation in specific applications, especially in areas like entertainment.

Tech Wire Asia spoke with Mike Linksvayer, VP of developer policy at GitHub, for insights into the evolving software development landscape and into why policymakers should regulate commercial AI products and services rather than the open source developers who create components that may end up in those systems. Linksvayer stressed that organizations should focus on equipping developers with the best tools and on enhancing their productivity and skill development through hands-on experience.

Mike Linksvayer, VP of developer policy at GitHub.

“Using advanced tools like Copilot not only boosts productivity within an organization but also increases the market value of developers as early adopters of new technology,” Linksvayer added. “Focusing on productivity is crucial for both the company and the individual, as it ultimately leads to new opportunities.”

The role of AI and open source in rethinking education and skills development

With AI transforming the job market, questions arise about how education should shift to better prepare future generations. Linksvayer highlights the importance of a solid foundation in basic education, especially in this era of rapidly advancing technology. He considers skills like reading comprehension and critical thinking fundamental to that foundation.

Tools like GitHub Copilot serve as effective learning coaches, even though they were not originally designed for this purpose. However, a strong grasp of language is essential to benefit fully from these tools. As Thomas Dohmke, CEO of GitHub, emphasized in his keynote at GitHub Universe 2023, natural language is becoming the new interface for programmers, and lacking proficiency in it can be a significant disadvantage.

Thomas Dohmke, CEO of GitHub, presents the Day 1 keynote at GitHub Universe 2023. (Source – GitHub).

“In a world that is changing rapidly, mastering the basics is increasingly important,” Linksvayer advised. “My recommendation to the government would be to focus on these fundamentals, ensuring that individuals are equipped with the necessary skills to adapt and continue learning.”

Challenges in measuring innovation

As we consider the expansive role of software development, another critical aspect emerges: the challenge of accurately measuring innovation in this dynamic field. Traditional innovation measurement has often relied on quantifiable elements, such as the number of patents and academic papers. However, these metrics can fail to capture the full spectrum of innovation, particularly the qualitative aspects of these outputs.

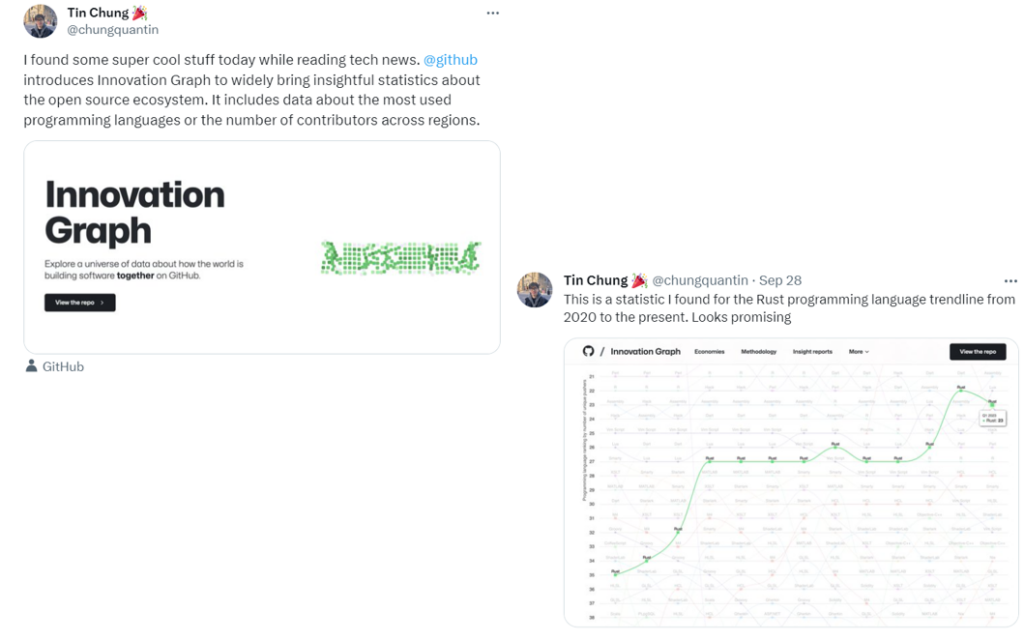

Linksvayer points out that the GitHub Innovation Graph recognizes the critical role of software in the innovation process. Software is not just a result of innovative efforts but also a catalyst for further innovation. Its impact is widespread, enhancing efficiency and capabilities in various fields, including academic research, through tools like Jupyter notebooks and AI integration.

The GitHub Innovation Graph is designed to integrate software development into traditional innovation metrics. Collaborations with entities like the World Intellectual Property Organization mark a shift towards a more inclusive approach to measuring innovation, acknowledging the significant role of software in this ecosystem.

An X user shows the use of GitHub Innovation Graph. (Source – X).

Ethical considerations in data usage

GitHub emphasizes ethical data practices, particularly concerning the Innovation Graph. The company focuses on privacy, publishing only aggregated data and setting thresholds that prevent the identification of individual activities. This approach demonstrates a cautious and responsible method of data handling.
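To make the idea of threshold-based publishing concrete, here is a minimal Python sketch of the general technique: count records per group and publish only groups that meet a minimum size. It assumes a simple list of per-user economy codes and a hypothetical minimum group size of 100; neither the data shape nor the threshold comes from GitHub, and this is not the Innovation Graph's actual pipeline.

```python
from collections import Counter

# Hypothetical minimum group size, chosen for illustration only;
# GitHub's actual thresholds are not described in this article.
MIN_GROUP_SIZE = 100


def aggregate_with_threshold(user_economies, min_group_size=MIN_GROUP_SIZE):
    """Count users per economy and suppress any group below the threshold.

    user_economies: iterable of economy codes, one entry per (hypothetical)
    user record. Returns a dict of economy -> count containing only groups
    large enough to publish, so small groups cannot single out individuals.
    """
    counts = Counter(user_economies)
    return {
        economy: count
        for economy, count in counts.items()
        if count >= min_group_size
    }


# Example: 150 users in "SG" and 40 in "BN"; only "SG" clears the threshold.
records = ["SG"] * 150 + ["BN"] * 40
print(aggregate_with_threshold(records))  # {'SG': 150}
```

Suppressing small groups, rather than publishing every count, is what keeps aggregate statistics from pointing back to a handful of identifiable people.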

“Our goal is to simplify the process for entities aiming to include software development in their innovation measurement, whether they are governments, companies devising strategies, or academics pursuing better measurement methods,” said Linksvayer. He expressed excitement about the potential for more comprehensive and accurate innovation metrics at various levels, underscoring GitHub’s commitment to this endeavor.

Navigating AI in commercial and open source contexts

Building on the theme of software’s evolution and its impact on various sectors, it is also worth examining how AI is being handled in both commercial and open source environments, particularly under new regulatory regimes.

Understanding the basics leads to the next step: grasping the policy aspects, a topic gaining attention with the EU AI Act. Regulatory environments will need to evolve to address AI’s distinct challenges in both commercial and open source contexts. Linksvayer recommends regulating AI systems according to the risk of their applications, stressing that the focus should be on the domain of deployment rather than on the AI system itself.

Linksvayer explains that if an application is high-risk, regulatory requirements should align precisely with that domain. This approach is especially relevant in the EU, where regulation typically comes into play when products, often emerging from commercial transactions, enter the market.

In terms of regulation, open source presents both advantages and challenges. While open source models can be more complex to regulate due to their nature of not being easily ‘pulled from the market,’ their transparency is a significant advantage. Linksvayer believes that open source will drive much of the progress in building responsible AI and automation, including fairness tools and rapid iteration of open models, which can often be more effective than relying solely on APIs.

The potential of both open source and proprietary AI is substantial, and regulations must support the strengths of both approaches. Just as the software industry benefits from both open source and proprietary models, rules such as copyright and product liability should be designed to accommodate both and serve society’s interests. These are long-term questions, and regulatory frameworks will need to evolve alongside the technology.

Confronting economic disparities in AI integration

An important issue that arises alongside these considerations is mitigating the risk of widening economic disparities due to AI integration in the workforce. This concern becomes increasingly relevant as we explore the impacts of open source and proprietary AI developments. Addressing this challenge is essential to ensure that AI advancements contribute positively to society and do not exacerbate existing inequalities.

Linksvayer points out several key factors to consider. First and foremost is the importance of getting the basics right, which extends beyond AI to encompass fundamental principles. While opinions may vary on these basics, establishing a solid foundation in education and other elements of an inclusive economy is critical. Regardless of the level of technical advancement, including AI, these foundational elements are crucial for creating an environment that fosters inclusive economic growth.

Ensuring equal access to educational opportunities, social connections, and necessities like affordable housing significantly affects an individual’s capacity to benefit from and contribute to economic growth.

Regarding AI, Linksvayer advocates for a balanced and open-minded approach. Finding a middle ground between openness and preemptive regulation is vital.

Overly restrictive regulation can hinder competition and limit the introduction of new products, potentially confining benefits to a smaller group and inhibiting wider benefit distribution. Striking the right balance involves solidifying basic principles and then carefully navigating the rest, particularly with the rise of generative AI and the questions it raises about policy implications.

“Companies should focus on the productivity implications of AI,” Linksvayer suggests. “While there are many open policy questions, such as AI’s safety and its impact on jobs, I believe the productivity benefits of AI are overwhelming. GitHub is actively assisting policymakers in understanding these aspects to ensure AI’s impact benefits human progress.”

For companies, responsibly adopting AI should be a priority, which involves considering broader questions but focusing mainly on the productivity benefits. Following best practices in AI adoption is crucial and varies depending on the industry and business context. An example is GitHub Copilot, primarily a productivity tool. This contrasts with AI applications for more complex tasks, like classifying people, which require deeper consideration.

For most businesses, particularly those involved in software, utilizing tools like Copilot to enhance productivity is sensible, aiming to optimize the efficiency of software engineers.
