- AWS unveils the Trainium2 AI training chip and the Graviton4 general-purpose processor at re:Invent 2023.

- Titan Image Generator is now in preview for AWS users on Amazon Bedrock.

- Guardrails for Amazon Bedrock helps customers implement safeguards in generative AI applications.

Generative AI has been a central theme at almost every tech conference this year, and Amazon Web Services' re:Invent 2023 was no exception. In his keynote, AWS CEO Adam Selipsky stressed the company's readiness to defend its leading position in the cloud market. Like most of its rivals, AWS is actively rolling out AI tools and services.

Selipsky said AWS aims to support organizations throughout the AI lifecycle with infrastructure, models, and applications. "If you're building your models, AWS is relentlessly focused on everything you need: the best chips and most advanced virtualization, powerful petabyte-scale networking capabilities, hyperscale clustering, and the right tools to help you build," he said during a keynote that lasted nearly two and a half hours.

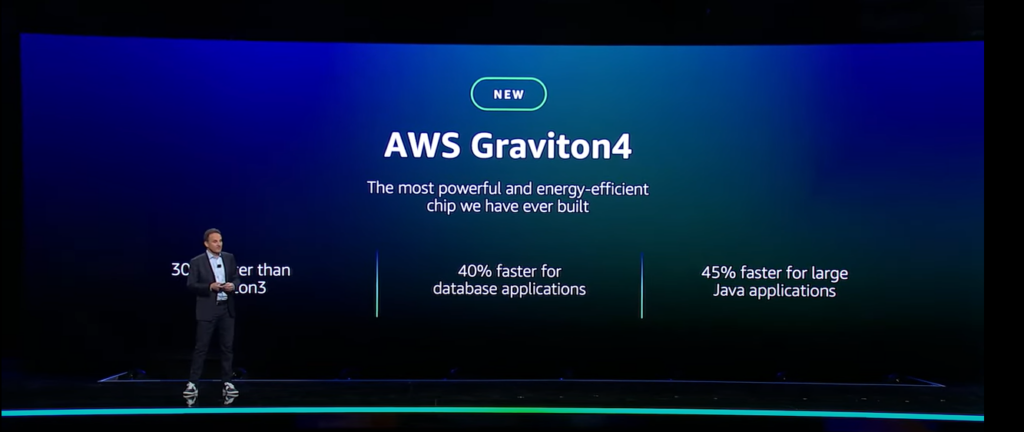

Trainium2 & Graviton4: new custom chips for AI training and general workloads

Growing demand for generative AI has led to a shortage of GPUs, with Nvidia's high-performance chips reportedly unavailable until 2024. In response, tech giants are developing custom chips for building, iterating on, and deploying AI models to reduce their reliance on GPUs. Amazon used the re:Invent stage this year to introduce its latest generation of chips, aimed at easing its dependence on companies like AMD and Nvidia.

AWS Trainium2 aims to deliver up to four times the performance and twice the energy efficiency of its predecessor, Trainium, which was introduced in December 2020. Amazon plans to offer it in EC2 Trn2 instances, each containing 16 chips, in the AWS cloud. Trainium2 is designed to scale: customers can deploy up to 100,000 chips in AWS's EC2 UltraClusters.

AWS Trainium2 will power the highest-performance compute on AWS for training foundation models faster and at a lower cost while using less energy. Source: AWS's YouTube

Deployments at that scale provide supercomputer-class performance, with up to 65 exaflops of compute power. "Exaflops" and "teraflops" measure how many floating-point operations a system can perform per second: one teraflop is a trillion (10^12) operations per second, and one exaflop is a quintillion (10^18). Large-scale Trainium2 deployments will let customers train large language models with 300 billion parameters in weeks rather than months, the company claims.
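To put the units in perspective, the short calculation below divides the quoted 65-exaflop figure across a full 100,000-chip UltraCluster. This is our own back-of-the-envelope arithmetic, not a figure AWS published: it assumes the 65-exaflop peak applies to exactly that cluster size and that compute is split evenly across chips.

```python
# Back-of-the-envelope arithmetic (assumption: AWS's 65-exaflop peak figure
# corresponds to a full 100,000-chip UltraCluster, split evenly per chip).
TERA = 10**12  # 1 teraflop = 10^12 floating-point operations per second
EXA = 10**18   # 1 exaflop  = 10^18 floating-point operations per second

cluster_flops = 65 * EXA   # quoted peak for a large-scale Trainium2 deployment
max_chips = 100_000        # maximum UltraCluster size quoted by AWS

# Integer division keeps the result exact.
per_chip_teraflops = cluster_flops // max_chips // TERA

print(f"Implied peak per chip: {per_chip_teraflops} teraflops")
```

Under those assumptions, each chip would contribute roughly 650 teraflops of peak compute.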

"Trainium2 chips are designed for high-performance training of models with trillions of parameters. [...] The cost-effective Trn2 instances aim to accelerate advances in generative AI by delivering high-scale ML training performance," Amazon said in a release.

Amazon did not give a release date for Trainium2, saying only that the chips will be available to AWS customers "sometime next year." The second chip the cloud giant introduced was Graviton4. "With each successive generation of chip, AWS delivers better price performance and energy efficiency, giving customers even more options—in addition to chip/instance combinations featuring the latest chips from third parties like AMD, Intel, and NVIDIA—to run virtually any application or workload on Amazon Elastic Compute Cloud (Amazon EC2)," Amazon said.

Graviton4 raises the bar on price performance and energy efficiency for a broad range of workloads, according to AWS. Source: AWS's YouTube

Graviton4 is the fourth generation of Graviton processors AWS has delivered in just five years and is "the most powerful and energy-efficient chip we have ever built for a broad range of workloads," said David Brown, vice president of Compute and Networking at AWS. According to a press release, Graviton4 offers up to 30% better compute performance, 50% more cores, and 75% more memory bandwidth than current-generation Graviton3 processors, making it suitable for a wide variety of workloads on Amazon EC2.

Nvidia at AWS re:Invent 2023

Nvidia CEO Jensen Huang makes a surprise appearance during AWS CEO Adam Selipsky's keynote at re:Invent. Source: AWS's YouTube

AWS said it aims to distinguish itself as a cloud provider by offering a range of cost-effective options for AI. The strategy doesn't rely solely on selling affordable Amazon-branded products; much like its online retail marketplace, Amazon's cloud platform will also showcase premium products from other vendors, including Nvidia's much sought-after GPUs.

Following Microsoft's unveiling of its first AI chip, the Maia 100, and its plans to bring Nvidia H200 GPUs to the Azure cloud, Amazon made similar announcements at re:Invent. It revealed plans to offer access to Nvidia's latest H200 AI graphics processing units alongside its two new chips.

“AWS and Nvidia have collaborated for over 13 years, beginning with the world’s first GPU cloud instance. Today, we offer the widest range of Nvidia GPU solutions for workloads including graphics, gaming, high-performance computing, machine learning, and now, generative AI,” Selipsky said. “We continue to innovate with Nvidia to make AWS the best place to run GPUs, combining next-gen Nvidia Grace Hopper Superchips with AWS’s EFA powerful networking, EC2 UltraClusters’ hyper-scale clustering, and Nitro’s advanced virtualization capabilities.”

A more detailed look at AWS and Nvidia's collaboration is available in a separate article.

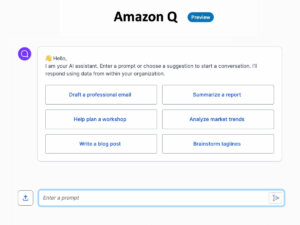

Amazon Q: a chatbot for businesses unveiled at AWS re:Invent 2023

Amazon Q can help you get fast, relevant answers to pressing questions, solve problems, generate content, and take actions using the data and expertise found in your company’s information repositories, code, and enterprise systems. Source: AWS

Amazon has entered the crowded AI assistant market with Amazon Q. Developed by the company's cloud computing division, this workplace-focused chatbot is tailored for corporate rather than consumer use. "We think Q has the potential to become a work companion for millions and millions of people in their work life," Selipsky told The New York Times.

Amazon Q is designed to help employees with daily tasks, from summarizing strategy documents to handling internal support tickets and answering questions about company policies. In the corporate chatbot market, it will compete with rivals such as Microsoft's Copilot, Google's Duet AI, and OpenAI's ChatGPT Enterprise.

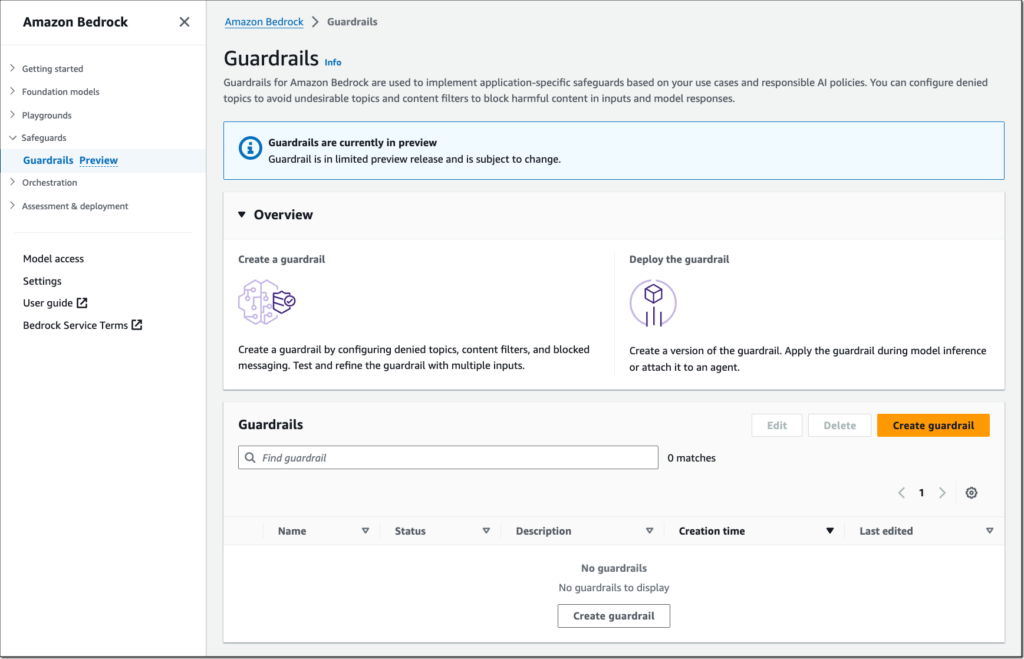

Titan Image Generator and Guardrails for Bedrock

Joining a growing list of companies with image-generation tools, Amazon is introducing its own. Unveiled at AWS re:Invent 2023, the Titan Image Generator is now in preview for AWS users on Bedrock. Part of the Titan family of generative AI models, it can generate new images from text prompts or customize existing ones.

“[You] can use the model to easily swap out an existing [image] background to a background of a rainforest [for example],” Swami Sivasubramanian, VP for data and machine learning services at AWS, said onstage. “[And you] can use the model to seamlessly swap out backgrounds to generate lifestyle images, all while retaining the image’s main subject and creating a few more options.”

AWS also unveiled Guardrails for Amazon Bedrock, which lets customers implement safeguards so that user interactions align with company policies. "These guardrails facilitate the definition of denied topics and content filters, removing undesirable content from interactions," AWS noted in a blog post.

You can apply guardrails to all large language models (LLMs) in Amazon Bedrock, including fine-tuned models, and Agents for Amazon Bedrock. Source: AWS

Guardrails can be applied to all large language models in Amazon Bedrock, including fine-tuned models and Agents for Amazon Bedrock, and promote "safe innovation" while managing user experiences. By standardizing safety and privacy controls, Guardrails for Amazon Bedrock helps customers build generative AI applications that align with responsible AI goals, AWS said.

Dashveenjit Kaur

| @DashveenjitK

Dashveen writes for Tech Wire Asia and TechHQ, providing research-based commentary on the exciting world of technology in business. Previously, she reported from the ground on Malaysia's fast-paced political arena and stock market.