Fintech is the leading sector for AI adoption and readiness in risk and compliance. (Image generated by AI).

Fintechs leading the charge for AI adoption in risk and compliance

- Moody's Analytics study shows fintech is the leading sector for AI adoption and readiness in risk and compliance.

- APAC respondents expect to adopt AI faster in risk and compliance than in other departments.

- However, internal data quality remains a big challenge to using AI.

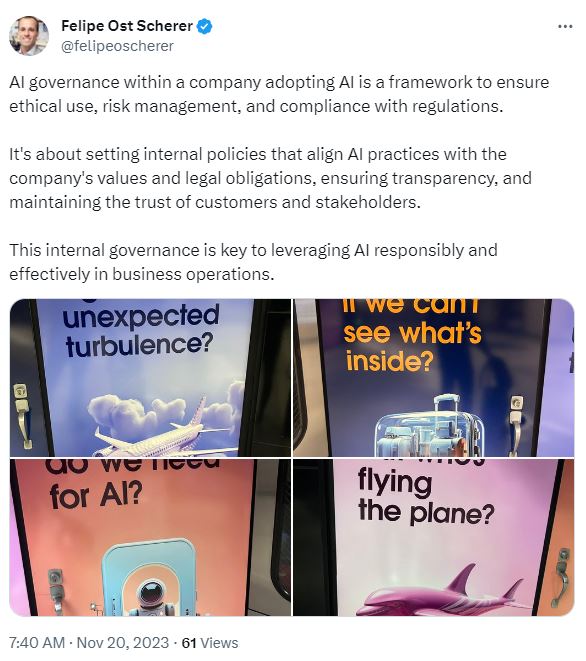

As the world looks to strengthen AI regulations, using AI in risk and compliance could be one way of ensuring the technology is not abused. AI adoption in risk and compliance may still be in its nascent stages, but the technology is improving, with more companies feeling the best way to ensure they meet regulations on technology is by using technology itself.

The financial services industry, which prioritizes risk and compliance most, is already using technology to do so. With AI, measuring risk and compliance is a lot faster. Fintech companies in particular can easily adopt this, given the amount of technology already involved in the industry.

In fact, a recent study by Moody's Analytics found that fintech is the leading sector for AI adoption and readiness in risk and compliance, with 18% of fintech respondents actively using AI, double the percentage of respondents across all surveyed sectors (9%).

Sectors like insurance, asset management, and wealth management have been slower on AI uptake and adoption. Only 3% of respondents in those areas reported actively using AI currently, though another 11% are piloting it. Banking sits just behind fintech, with 12% of respondents actively employing AI.

“Compliance professionals are convinced that AI will be transformative for their industry, but obstacles remain that could hinder risk management and compliance functions from capitalizing on its potential. The benefits of AI are currently viewed in easy-to-measure quantitative terms.

“Process efficiencies are a good start to AI adoption, but they are only scratching the surface of the technology’s capabilities. Advanced data analytics, accurate predictions, and the scalability of data are all features compliance teams will not want to miss out on,” said Keith Berry, general manager for KYC Solutions at Moody’s Analytics.

Looking specifically at the use of AI for risk and compliance, the benefits compliance professionals are most likely to cite are improved efficiency in processes (72%), increased speed of data processing and analysis (72%), and cost savings due to automation or improved decision-making (66%). Fewer currently recognize the potential for more advanced, transformative benefits, such as improved accuracy of results and predictions (51%) and the reduction of false positives (49%).

“With many of the professionals we spoke to expecting the widespread adoption of AI in the next one to five years, steps need to be taken for it to meet its transformative potential across risk management and compliance. For example, when based on high-quality data, AI is able to drastically reduce the number of false positives in a KYC screening process at scale and can result in up to 80% of level-one investigation and triage happening instantly and accurately. The overall outlook for AI is strong if compliance teams acquire the right expertise and data to fully capitalize on the opportunity,” explained Berry.

Can AI make a difference to ensuring risk and compliance?

AI adoption in risk and compliance in APAC

The Moody's Analytics study, Navigating the AI landscape: insights from compliance and risk management leaders, included a survey of more than 550 senior compliance and risk management professionals from 67 countries to assess their perspectives on and uses of AI.

Respondents from Asia Pacific (APAC) are the most likely to think they will adopt AI faster in risk and compliance than in other departments (30%, compared to 18% in Europe and 16% in the Americas). That is not surprising, given how countries like Singapore are promoting the use of AI by organizations.

The APAC region is also the most keen for vendors to integrate AI tools, at 90% versus 77% in Europe and 68% in the Americas. In addition, 90% of APAC respondents consider it fairly or very important to have new regulations on AI. However, APAC respondents are also the most concerned about job displacement, at 30%, compared to 13% in Europe and 14% in the Americas.

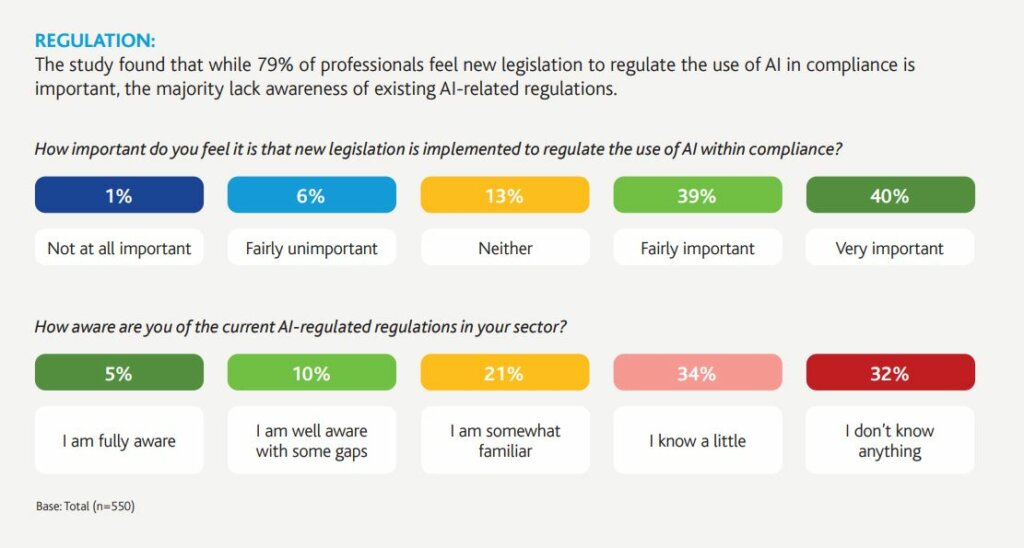

The study found that while 79% of professionals feel new legislation to regulate the use of AI in compliance is important, the majority lack awareness of existing AI-related regulations. (Source: Moody's Analytics).

Beyond the early adopters of AI

Another highlight from the study is that outside of the early adopters of AI, most firms have yet to embrace the use of large language models (LLMs). However, there is broad agreement that AI technologies, including GenAI, will deliver advantages for risk and compliance.

Despite the rapid growth of LLMs, caution remains in risk and compliance. Only 28% take a positive stance on these models, while 25% are actively discouraging or prohibiting their use and 46% have yet to adopt an LLM policy. Just 41% associate LLM terminology with risk and compliance.

One of the reasons for this could be the challenges of their internal data quality. Only 14% of those surveyed rated their own data as high quality. Resolving data issues is critical to reducing LLM hallucinations and improving the accuracy of AI outputs. More than half of the respondents stated their data quality was either inconsistent (44%) or fragmented (22%).

Inconsistent data quality means the data is structured but contains inconsistencies, requires manual cleansing, and has limited breadth and depth. Fragmented data quality means the data is unstructured and requires significant cleansing before meaningful use. Only 2% of respondents have a superior-quality data infrastructure with real-time refinement, which allows them to integrate the data seamlessly into decision-making.
