This handout picture taken and released by Nvidia shows Nvidia founder and chief Jensen Huang speaking at Computex 2023 in Taipei on May 29, 2023. (Photo by Handout / NVIDIA / AFP)

The dawn of a new era: NVIDIA’s trillion-dollar agenda with generative AI and 5G

- NVIDIA and SoftBank partner on a novel AI and 5G platform backed by the Grace Hopper Superchip.

- NVIDIA debuts a high-performance Ethernet networking platform tailored for hyperscale AI.

Brace for impact! The world stands on the cusp of a seismic shift, what NVIDIA CEO Jensen Huang calls the ‘iPhone moment’ for AI. Two key developments are driving it: a revolution in computing architectures and unprecedented breakthroughs in deep learning.

Computing architecture has shifted from a CPU-centric model to accelerated computing, driven by the limits of CPU performance scaling. At the same time, deep learning has advanced significantly, with transformer-based models working across a range of modalities, all powered by accelerated computing.

Investors have taken notice. NVIDIA briefly joined the elite club of U.S. companies with a US$1 trillion market value, as investors piled into the chipmaker that has quickly become one of the biggest winners of the AI boom. Its stock price tripled in less than eight months, reflecting the surge of interest in artificial intelligence that followed rapid advances in generative AI, which can hold human-like conversations and produce everything from jokes to poetry.

Powering the future with generative AI

The burgeoning field of generative AI is driving the build-out of large AI data centers based on NVIDIA’s architectures. At the same time, telecom networks have been moving away from proprietary 5G infrastructure towards virtualized solutions such as virtualized RAN (vRAN). However, optimizing this infrastructure for accelerated workloads remains a challenge.

“NVIDIA is addressing this gap,” said Ronnie Vasishta, SVP, Telecom, NVIDIA. “We’ve melded these two trends to create the NVIDIA Accelerated Cloud, transforming 5G data centers into AI factories, and vice versa. The aim is to operate 5G and generative AI applications, training, and inference within the same data center without compromising the performance of the 5G Radio Access Network.”

NVIDIA is thus reshaping 5G infrastructure to meet the growing demand for generative AI capabilities. The company is backing the move away from costly, single-vendor, proprietary RAN infrastructure towards the more flexible, multi-vendor approach promoted by the Open RAN movement.

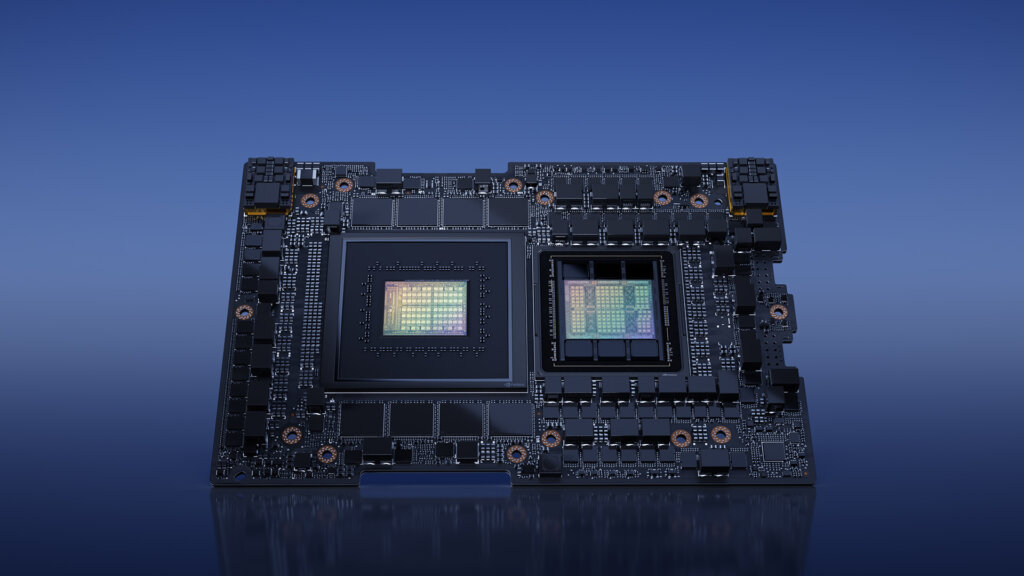

This transformation was on display at COMPUTEX, where NVIDIA and SoftBank Corp. announced that they are collaborating on a new platform for generative AI and 5G/6G applications. The platform, based on the NVIDIA GH200 Grace Hopper Superchip, is set to be deployed across new, distributed AI data centers in Japan.

NVIDIA GH200 Grace Hopper Superchip (Source – NVIDIA)

NVIDIA powering up SoftBank’s next-gen data centers with AI

At COMPUTEX, Huang revealed new systems, partners, and additional details about the GH200 Grace Hopper Superchip. The Superchip combines the Arm-based NVIDIA Grace CPU and the Hopper GPU architecture in a single package designed for generative AI and high-performance computing (HPC) applications.

SoftBank plans to build data centers that host generative AI and wireless applications on a shared server platform. This platform will use NVIDIA’s new MGX reference architecture, which is expected to improve performance, scalability, and resource utilization.

The MGX server specification provides a modular reference architecture that lets system makers efficiently build many server variations for a wide range of AI and computing applications. Major manufacturers, including ASRock Rack, ASUS, GIGABYTE, Pegatron, QCT, and Supermicro, plan to adopt MGX to speed up development and cut costs.

“As society moves towards coexistence with AI, data processing and energy requirements will surge. SoftBank is poised to provide next-generation social infrastructure to support Japan’s burgeoning digital society,” said Junichi Miyakawa, CEO of SoftBank Corp. “Our collaboration with NVIDIA will amplify performance and curtail energy consumption.”

Through the collaboration, SoftBank aims to use AI to significantly improve its infrastructure, including optimizing its RAN. The partnership is also expected to help reduce energy consumption and to create a network of interconnected data centers that can share resources and host a variety of generative AI applications.

“Demand for accelerated computing and generative AI is fueling a fundamental shift in data center architecture,” Huang stated. “The NVIDIA Grace Hopper is a revolutionary computing platform, designed to process and scale out generative AI services. SoftBank, like in their past visionary initiatives, is paving the way globally to establish a telecom network designed to host generative AI services.”

Society coexisting with AI: SoftBank’s commitment with NVIDIA

SoftBank’s upcoming data centers will be more evenly distributed and will handle both AI and 5G workloads on the same infrastructure, creating a shared platform for 5G applications and generative AI. This setup is intended to let the centers run at peak capacity with lower latency and significantly lower energy costs.

Looking ahead, SoftBank is exploring 5G applications in fields such as autonomous driving, AI factories, augmented and virtual reality, computer vision, and digital twins. By combining NVIDIA’s technology with SoftBank’s vision, the partnership aims to open up new possibilities in these areas.

The collaboration between NVIDIA and SoftBank marks a significant step for the AI and telecommunications industries. The two companies envision a future in which generative AI and 5G/6G networks share the same infrastructure, with AI woven into everyday life and network capabilities keeping pace with the demands of an increasingly digital society.

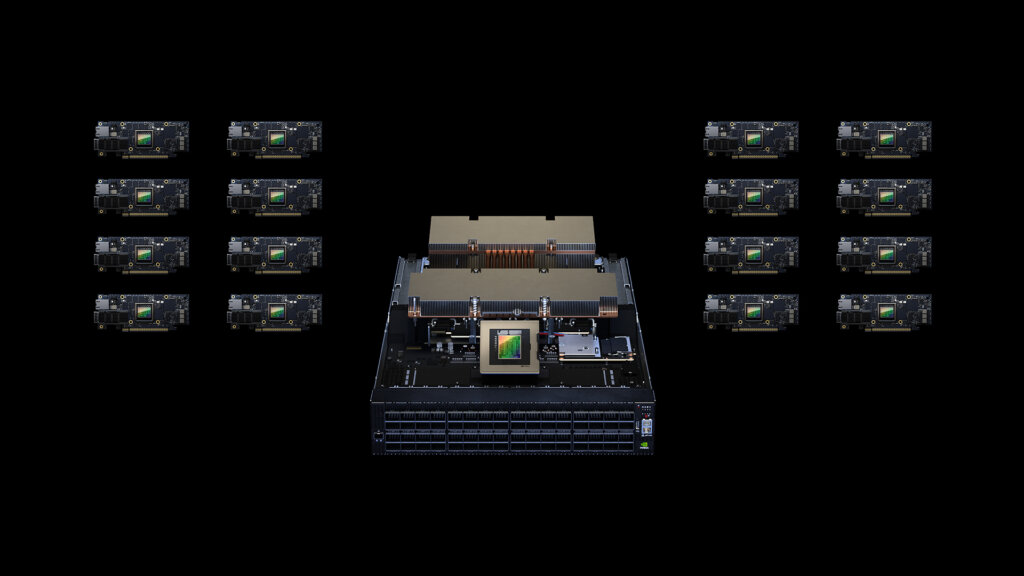

NVIDIA Spectrum-X: Supercharging Ethernet-based AI clouds

NVIDIA also launched NVIDIA Spectrum-X, a networking platform designed to improve the performance and efficiency of Ethernet-based AI clouds.

NVIDIA Spectrum-X (Source – NVIDIA)

Spectrum-X is built on the combination of the NVIDIA Spectrum-4 Ethernet switch and the NVIDIA BlueField-3 DPU. According to NVIDIA, it delivers 1.7x better overall AI performance and power efficiency, along with consistent, predictable performance in multi-tenant environments. With NVIDIA’s acceleration software and SDKs, developers can use Spectrum-X to build software-defined, cloud-native AI applications.

Spectrum-X is designed to cut run times for large transformer-based generative AI models, helping network engineers, AI data scientists, and cloud service providers improve results and make informed decisions faster.

According to NVIDIA, leading cloud innovators and the world’s largest hyperscalers are already adopting Spectrum-X.
