Peter Marrs, Dell Technologies’ President for Asia Pacific & Japan, shared why APAC enterprises cannot put off harnessing generative AI any longer. (Photo by Brandon Bell / GETTY IMAGES NORTH AMERICA / Getty Images via AFP)

We asked Dell why enterprises can’t afford to hold back on adopting generative AI

- Peter Marrs, Dell Technologies’ president for Asia Pacific & Japan, explained why APAC enterprises cannot put off harnessing generative AI any longer.

- Marrs also outlined generative AI’s potential for enterprises and the benefits of gaining an early advantage.

- He talked through the tech infrastructure that is crucial for handling large data sets securely.

Like the groundbreaking advances in personal computing four decades ago and server virtualization over two decades ago, Dell Technologies believes the current wave of generative AI is another transformative era with boundless potential. “We’re still in the early stages of the generative AI revolution, but it’s real. Generative AI is an inflection point. It’s disruptive. It’s game-changing. More to learn. More to come,” vice chairman and COO Jeff Clarke wrote in a blog post recently.

The excitement surrounding generative AI is understandable, especially in Asia, where we see a growing appetite for the technology among enterprises since the launch of OpenAI’s ChatGPT in late 2022. In fact, a report by International Data Corporation (IDC) shows that two-thirds of surveyed organizations in Asia Pacific & Japan are looking into investing in generative AI and technologies affiliated with it.

Like the IDC data, most statistics on generative AI worldwide have been astounding. Pundits say no other technology has ever captured the interest of corporate executives and the general public so rapidly and thoroughly. But that also means discussion around generative AI is often polarized, with enthusiasts and skeptics dominating the discourse, leaving little room for the middle ground.

This poses a significant challenge for business leaders who must make AI-related decisions grounded in factual information and strategic significance, rather than being swayed by overconfidence and exaggerated claims. Peter Marrs, president of Dell Technologies’ Asia Pacific & Japan (APJ) region, spoke with Tech Wire Asia to share the compelling need for enterprises to embrace generative AI correctly.

This interview has been edited for length and clarity.

TWA: How should enterprises approach generative AI now?

What technology enables is incredible, and the stakes for IT and its impact on society have never been higher, placing a spotlight on what is needed to unlock actual value and do so ethically. Enterprises should prioritize a deep understanding of how generative AI works and the specific use cases to which it can be applied in their businesses. Beyond understanding the infrastructure required for it, companies should also look into legal and ethical considerations, as well as security and privacy, to ensure their AI implementation serves them long-term.

Peter Marrs, president, Asia Pacific & Japan, Dell Technologies.

TWA: Why does Dell believe APAC enterprises cannot put off harnessing generative AI for long?

Enterprises must stay at the forefront of innovations, including generative AI, to meet consumers’ needs and expectations. In APAC, consumers have shown demand for personalized, seamless, and omnichannel experiences, with 94% of APAC consumers saying they are willing to spend more with companies that personalize the customer service experience.

Generative AI can help enterprises evolve to meet these needs by powering product recommendations and improving AI-driven chatbot customer service.

Generative AI can also play a critical role in protecting enterprises against emerging threats of cyberattacks and data breaches across our region. According to a Check Point Software Technologies report, APAC witnessed the highest year-over-year increase in weekly cyberattacks during the first quarter of 2023, averaging 1,835 attacks per organization.

Cybersecurity and fraud management is a challenge where generative AI can be essential in predicting threats, enhancing threat intelligence, and enabling faster enterprise-wide detection of and response to vulnerabilities. And last but certainly not least, AI offers immense opportunities that will fundamentally shift work and make it more effective for humans.

As generative AI evolves, machines pick up more day-to-day tasks, freeing employees to focus on higher-value activities. Organizations should explore using AI to increase efficiency and focus their team members on high-priority work that better meets customers’ demands.

TWA: How can enterprises harness the first mover’s advantage in the race for GenAI? Does Dell AI help deliver that advantage?

To harness its full potential, leaders must understand the technology’s capabilities and potential applications specific to their business. AI projects, at their core, are about a decision to operate parts of your business differently, so all corporate functions need to be involved in AI projects.

They’ll also need to build internal expertise to prepare for the adoption of generative AI, finding talent that understands the model complexities and data requirements and that blends scientific acumen with creative capability. Beyond hardware, enterprises should delve deep into regulatory compliance and ownership of content through IP and patents, and consider ethical issues surrounding generative AI, including sustainability, to ensure that no harm is caused in the push for innovation.

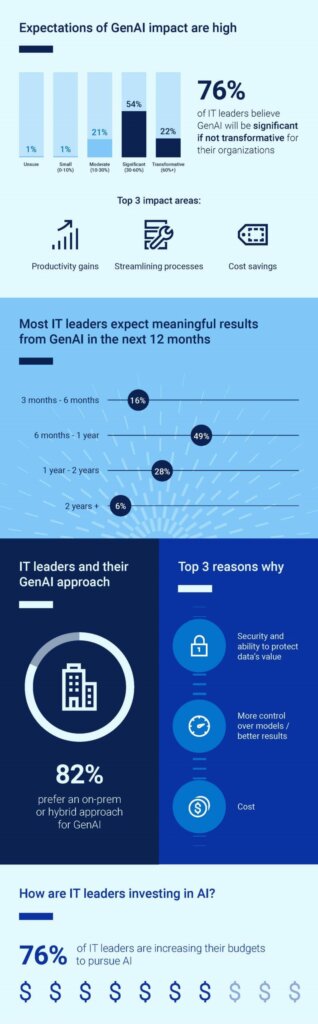

To better understand what’s limiting or stopping organizations from embracing these technologies, Dell Technologies surveyed 500 IT leaders from several countries to generate insights around readiness and potential. Source: Dell.

Note – you should not always associate AI with complexity. Some reasonably easy first-mover paths are less risky but provide a valuable tool set. Lower-risk projects are worth doing because they provide incremental value and give businesses foundational expertise to build upon.

Working with trusted IT partners to align resources is the quickest way enterprises can kick-start the process and enable the right strategic generative AI approach for their business. Engaging technology providers like Dell that offer AI expertise, hardware, and software for creating custom full-stack solutions can accelerate the deployment of generative AI in enterprises, unlocking the power of AI with support across the complete lifecycle, from strategy and implementation to adoption and scaling.

TWA: What foundations do enterprises need to start harnessing generative AI effectively?

To harness generative AI effectively and accelerate time to results, enterprises must strategically navigate technology options and integration challenges. This process hinges on establishing solid foundations, including:

- A robust data strategy: GenAI models thrive on data, and enterprises need a robust data strategy that involves collecting, cleaning, and curating high-quality data.

- Data privacy: GenAI can only be successfully deployed if data privacy and security are prioritized. Dell AI solutions simplify enterprise-level GenAI deployment with a tested combination of optimized hardware and software. This delivers the power to convert enterprise data into smarter, higher-value outcomes while maintaining data privacy.

- The necessary infrastructure: Training and deploying GenAI models can be computationally intensive. Enterprises need to invest in infrastructure that can facilitate efficient training and inference processes, provide scalability for handling varying workloads, and address data protection and regulation challenges.

- Iterative development: GenAI models often require multiple iterations and updates to improve performance. Enterprises need to implement a continuous development cycle, incorporating feedback from real-world usage, to refine and enhance the models over time.

TWA: For enterprises rushing to integrate generative AI tools with their internal and external workflows – what are some pitfalls to avoid when adopting this technology?

Data hygiene is critical since your ability to harness AI is only as good as the data feeding it. Generative AI workloads can be broadly categorized into training and inferencing. Training uses a large dataset of examples to train a model, while inferencing uses a trained model to generate new content based on input.

Generative AI models may require large amounts of training data to generate accurate results. To address this challenge, enterprises must ensure that their data is clean, precise, well-labeled, and representative of the problem they are trying to solve. When adopting the technology, organizations should also think carefully about computing power and how to support it through an IT infrastructure, whether in the internal data center, on the cloud, or via the edge. Executives who want to make the most of generative AI must create an ecosystem of trusted technology partners who help them bring in specialist capability as it’s demanded.
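To make the data-hygiene point concrete, here is a minimal Python sketch of the kind of check a team might run before training. It is an illustration only, not a Dell tool or anything described in the interview: the function name `audit_examples`, the sample records, and the label set are all hypothetical.

```python
from collections import Counter

def audit_examples(examples, allowed_labels):
    """Drop unusable rows; report what was dropped and the label balance."""
    seen = set()
    kept = []
    dropped = Counter()
    for text, label in examples:
        # Collapse whitespace and lowercase so near-identical rows match.
        normalized = " ".join(text.split()).lower()
        if not normalized:
            dropped["empty_text"] += 1
        elif label not in allowed_labels:
            dropped["bad_label"] += 1
        elif normalized in seen:
            dropped["duplicate"] += 1
        else:
            seen.add(normalized)
            kept.append((normalized, label))
    # A skewed label count is a hint that the data may not be representative.
    label_counts = Counter(label for _, label in kept)
    return kept, dropped, label_counts

# Hypothetical customer-service records, including three bad rows.
raw = [
    ("Where is my order?", "shipping"),
    ("where is  my order?", "shipping"),   # duplicate after normalization
    ("", "billing"),                       # empty text
    ("Refund has not arrived", "biling"),  # mislabeled (typo in label)
    ("I was charged twice", "billing"),
]
kept, dropped, label_counts = audit_examples(raw, {"shipping", "billing"})
print(kept)
print(dict(dropped))
print(dict(label_counts))
```

A real pipeline would go further (language detection, near-duplicate matching, human label review), but even a simple pass like this surfaces the "clean, well-labeled, representative" problems before they reach a model.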

TWA: Are GenAI platforms a data governance risk for businesses?

Using generative AI in a business setting can pose various risks around accuracy, privacy, security, regulatory compliance, and intellectual property infringement. Generative AI models are refined based on input data provided by the company. If that data is not authorized for this use, or if privacy concerns are not adequately addressed, the result may be a data breach or non-compliance with data security regulations.

However, with the proper security measures, enterprises can mitigate such risks. Some measures include ensuring encryption, managing access rights, implementing monitoring mechanisms, and establishing acceptable data-handling practices.
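As a rough illustration of three of these measures (access rights, monitoring, and data-handling rules), the Python sketch below checks a caller's role, redacts obvious identifiers, and records an audit entry before any text would be passed to a model. Everything in it is an assumption made for the example: the role names, the `prepare_prompt` function, and the two simple patterns, which are far from a complete definition of sensitive data. Encryption is not shown.

```python
import re

# Deliberately simple patterns; real deployments need much broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
LONG_NUMBER = re.compile(r"\b\d{8,}\b")  # e.g. account or card numbers

ALLOWED_ROLES = {"support_agent", "analyst"}  # hypothetical role names
audit_log = []  # stand-in for a real monitoring system

def prepare_prompt(user_role, text):
    """Enforce access rights, redact identifiers, and log the request."""
    if user_role not in ALLOWED_ROLES:
        audit_log.append((user_role, "denied", 0))
        raise PermissionError(f"role {user_role!r} may not send data to the model")
    text, n_emails = EMAIL.subn("[EMAIL]", text)
    text, n_numbers = LONG_NUMBER.subn("[NUMBER]", text)
    audit_log.append((user_role, "allowed", n_emails + n_numbers))
    return text

safe = prepare_prompt(
    "analyst", "Contact jane.doe@example.com about account 1234567890."
)
print(safe)
print(audit_log)
```

Placing the check in front of the model, rather than inside each application, gives one place to tighten rules as regulations or internal policies change.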

TWA: What are some of the technology architectures that can not only process larger datasets that come with GenAI but also do so securely?

GenAI models can be sizable, with many parameters and intermediate outputs. This volume means that the models require significant amounts of storage to hold all the data. It is expected that they’ll use distributed data platforms such as Hadoop or Spark to store and process the training data and intermediate outputs during training.

For inferencing, storing the model on a local disk might be possible, but for larger models, it might be necessary to use network-attached storage or cloud-based storage solutions. AI systems need scalable, high-capacity, low-latency storage components for file and object storage.

TWA: Large language models are notably cost-ineffective to operate on a small scale. How realistic are models for businesses that run inside a “walled garden”?

There are two distinct variants of generative AI. The first is massive, multifunctional generalized models, with GPT-4 and ChatGPT as examples. The second is domain-specific ones, such as PubMed GPT or Dramatron, or enterprise-specific models, such as Stable Diffusion, BloombergGPT, and CodeGen.

The core neural network-based language model is essentially the same. However, the difference lies in the number of parameters and expert systems focused on unique functionality like common sense reasoning, translations, pattern recognition, reading comprehension, and code completion.

While massive multifunctional generalized models have many more parameters and expert systems, are trained on a broad and large volume of data, and may be more practical, the same is not true for domain- and enterprise-specific generative AI.

Domain- and enterprise-specific generative AI uses smaller proprietary data sets, with fewer parameters and expert systems. These models make more sense for businesses that operate inside a “walled garden” because they do not require massive scale or cost. In most cases, they can be trained on a small cluster of GPU servers and can run inference at the edge on a single server.
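A back-of-the-envelope calculation shows why parameter count drives the hardware footprint. The Python sketch below estimates only the memory needed to hold a model's weights, assuming 16-bit (2-byte) parameters. The parameter counts are illustrative round numbers, not the sizes of any model named above, and training needs considerably more memory than this because gradients and optimizer state must also be stored.

```python
def weights_gib(num_parameters, bytes_per_parameter=2):
    """Approximate memory for model weights alone, in GiB (2 bytes = 16-bit)."""
    return num_parameters * bytes_per_parameter / 2**30

# Illustrative sizes only (assumed for this example).
for name, params in [("smaller domain model", 7_000_000_000),
                     ("very large general model", 175_000_000_000)]:
    print(f"{name}: about {weights_gib(params):.0f} GiB of weights")
```

Under these assumptions, the smaller model's weights fit within the accelerator memory of a single well-equipped server, while the larger one has to be spread across many devices.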
