Graviton3E: Here’s what you need to know about the new AWS chip unveiled at re:Invent

Photo: Peter DeSantis, Senior Vice President of AWS Utility Computing. Source: AWS

- The new version of AWS’s custom Arm-based Graviton chips, unveiled at re:Invent, promises 35% better performance for workloads that depend heavily on vector instructions.

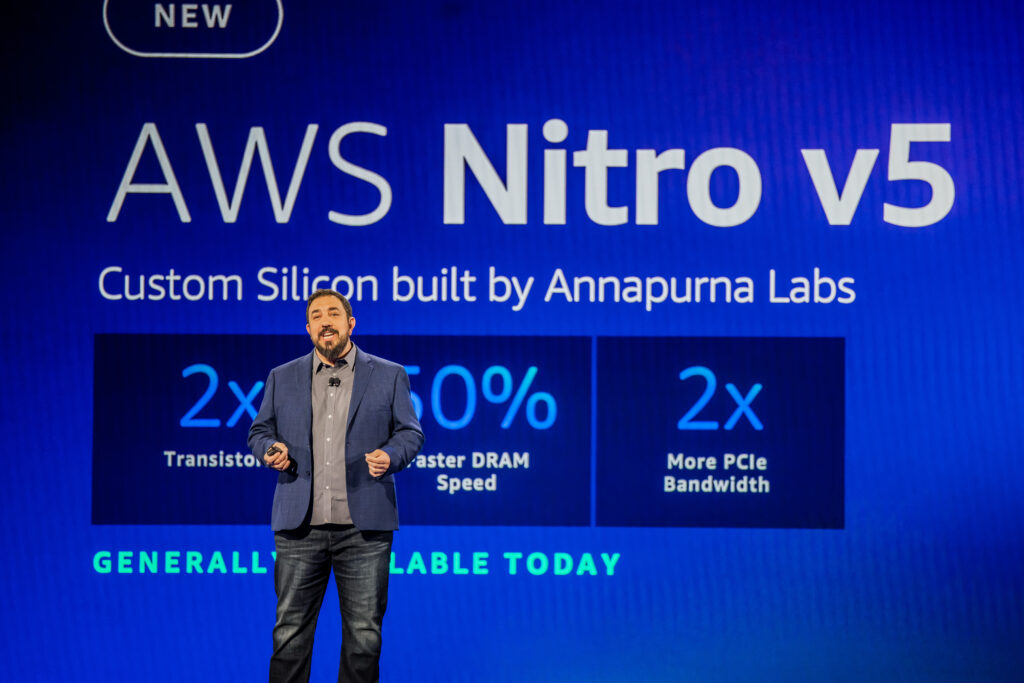

- AWS also unveiled a new version of its Nitro hypervisor and new instance types.

Four years ago, at AWS re:Invent 2018, Amazon Web Services, the cloud arm of tech giant Amazon, announced the first generation of its in-house-designed Graviton processors. It was a first for the cloud industry, and many believed it would be a game changer for the public cloud market. After all, it was the first time the Arm architecture would be rolled out for enterprise-grade use, and at such a colossal scale.

To recap, when AWS acquired Annapurna Labs in 2015, the vision was to change how the cloud is delivered through the Nitro System. The AWS Nitro System is the virtualization infrastructure that underlies the next generation of EC2 instances. Amazon EC2, or Elastic Compute Cloud, provides scalable computing capacity in the AWS Cloud.

That also means using Amazon EC2 eliminates a client’s need to invest in hardware up front, so they can develop and deploy applications faster. Ultimately, the goal of AWS Nitro is to accelerate AWS innovation, reduce customer costs, increase security, and deliver new instance types, including bare-metal instances where customers can bring their own hypervisor.

Now, Graviton has become an extension of that innovation, driving more choice and extending experiences across the entire infrastructure stack. To put it simply, the Graviton family of Arm-based custom processors was developed by AWS to provide customers with superior high-performance computing in EC2 with reduced costs.

“Performance can be hard to achieve when you refuse to budge on things like security and cost,” AWS Utility Computing senior VP Peter DeSantis said in his keynote session on the first night of re:Invent. Throughout the keynote, DeSantis centered his message on balancing performance, cost and security when designing solutions and services.

At this year’s re:Invent, AWS introduced its latest chip, dubbed the Graviton3E, extending the current Graviton family. According to DeSantis, the Graviton3E delivers noticeable performance improvements over the standard Graviton3, sustaining over 35% better performance for vector-based workloads.

Pundits have widely credited AWS with playing an important role in showcasing the benefits of Arm IP to the cloud computing market, from AWS Nitro to the first Graviton instances in 2018 to today. In his re:Invent keynote, DeSantis shared how the latest chip performed better in life sciences and financial modeling workloads, among others.

He also noted that the Graviton3E chip is optimized for vector and floating-point workloads, which are common in high-performance computing, especially research involving large-scale data modeling in fields such as finance, weather prediction, life sciences, materials science and chemistry. For comparison, the launch of Graviton3 provided up to 80% performance improvements over Graviton2 for some applications, along with significant boosts for encryption and video encoding.

What will Graviton3E support? AWS shares at re:Invent

According to DeSantis, Graviton3E will power a new set of EC2 instances, including the upcoming Hpc7g instances for high-performance computing workloads, with 200 Gbps of dedicated network bandwidth. They will be available in multiple sizes, with up to 64 virtual CPUs and 128 GiB of memory. It is important to note, however, that these instances will not be online until sometime next year.

The Graviton3E will also be available in C7gn instances for networking-intensive workloads such as virtual networking appliances (firewalls, virtual routers, load balancers and similar services), data analytics and tightly coupled computing clusters. DeSantis said they are capable of supporting 200 Gbps of network bandwidth and 200% higher packet performance.

He also explained that both of those new instances would take advantage of the new Nitro 5 hardware hypervisor, which was also announced at re:Invent. The fifth-generation Nitro card almost doubles on-board compute capacity and supports 50% more DRAM bandwidth, 60% higher packets per second, 30% lower latency and 40% better performance per watt, DeSantis said.

With the new Nitro, DeSantis said, C7gn offers 50% better packet-processing performance at the lowest latency and the highest throughput. That, according to him, was made possible because the team doubled the number of transistors on the custom Nitro chips.
