Unpacking the surge in cybersecurity issues in 2024. (Source – Shutterstock).

The rising tide of cybersecurity challenges in 2024 – What we’ve seen so far

- Cybersecurity challenges in 2024 will escalate with AI and phishing threats at the forefront, requiring stronger defenses.

- The Defiance Act targets AI-generated nonconsensual images, reflecting urgent cybersecurity law updates.

- Incidents like Lush’s breach underscore the need for improved cybersecurity and legislative response in 2024.

As 2024 unfolds, the digital landscape is witnessing an unprecedented surge in cyberthreats, with significant breaches reported as early as February. Russian hackers have stepped up their activity against key tech companies, including a notable intrusion at Hewlett Packard Enterprise (HPE) linked to a group known for compromising Microsoft email accounts.

Similarly, Malaysia is facing an increase in data security breaches, underscoring the pressing need for more robust cybersecurity measures. The urgency has prompted both the government and the corporate sector to strengthen cybersecurity laws and practices against these emerging threats.

A recent study by Keeper Security, which surveyed over 800 IT security leaders globally, indicates a concerning trend: an overwhelming 95% of participants report that cyberattacks have reached unprecedented levels of sophistication. The study highlights AI-powered attacks and phishing as the most severe and rapidly growing threats.

Evolving cybersecurity challenges in the age of AI and deepfakes

The cybersecurity environment of 2024 is complex and evolving, with AI and deepfake technologies identified as significant new threats. This development necessitates reevaluating security strategies to effectively address current and forthcoming challenges.

The Keeper Security survey pinpointed the top five attack vectors as AI-powered attacks, deepfake technology, supply chain attacks, cloud jacking, and Internet of Things (IoT) attacks, highlighting the diverse nature of the threats organizations currently face.

A significant incident involving the misuse of AI and deepfake technology targeted Taylor Swift, prompting legislative action in the US. After nonconsensual, sexualized AI-generated images of Swift spread widely on the social media platform X, a bipartisan group of senators introduced the Defiance Act, which targets the distribution of such images.

The Defiance Act aims to empower victims of digital forgeries to pursue civil penalties against those who create, possess, or distribute these images without consent. The initiative, led by Senators Dick Durbin, Lindsey Graham, Amy Klobuchar, and Josh Hawley, addresses the growing concern over AI-generated sexual content.

The spread of fake, sexually explicit images of Swift has underscored how easy it is to create and share deepfakes, raising significant concerns about digital consent and privacy. Although the images are artificial, the damage they cause is real, and both Swift’s fans and social media platforms have acted to limit their circulation. X, in particular, has restricted searches related to Swift in an effort to curb the spread of these harmful images.

Singapore faces similar challenges: deepfakes have misrepresented prominent figures, including the Prime Minister, in fraudulent content. These incidents have ignited discussions on the need for increased vigilance and stronger measures against the misuse of digital identities to promote scams.

Beyond these newer threats, global IT leaders have identified the fastest-growing attack vectors as phishing, malware, ransomware, password attacks, and Denial of Service (DoS).

The case of Lush’s breach by Akira

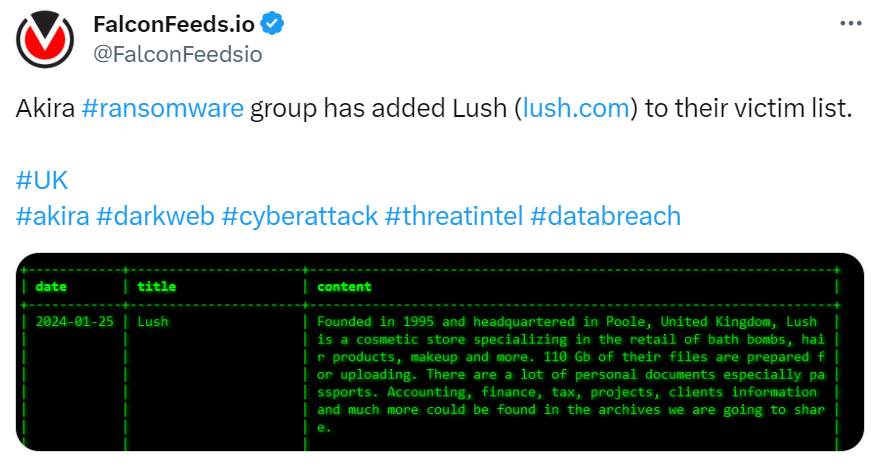

In a notable cybersecurity incident, the UK-based company Lush, known for its cosmetics and bath products, fell victim to a cyberattack claimed by the Akira ransomware group. Lush has engaged IT forensic experts to investigate the breach, which was initially reported on January 15, 2024. Chester Wisniewski of Sophos described the incident as a ransomware or extortion attack by Akira, which has publicly claimed responsibility for stealing 110 GB of data.

Akira has acknowledged the attack on Lush, but it remains unclear whether the attack involved ransomware, extortion, or both, an ambiguity that points to the group’s flexibility in its criminal endeavors. The absence of noticeable disruption to Lush’s operations might indicate that encryption was not part of the attack.

Akira ransomware group has added Lush to their victim list. (Source – X).

Wisniewski has observed Akira’s growing prominence in cybercrime, noting that the group exploits vulnerabilities, particularly in Cisco VPN products, and targets remote access tools that lack multi-factor authentication (MFA).

The breach at Lush highlights the importance of basic cybersecurity practices: promptly updating all externally facing network components and implementing MFA for remote access.
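To make the MFA recommendation concrete, here is a minimal sketch of one common second factor, the time-based one-time password (TOTP) defined in RFC 6238 and built on HOTP from RFC 4226. It does not describe how Lush, Cisco, or any vendor mentioned here implements MFA; the function names, the 30-second step, and the one-step drift window are illustrative assumptions.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password as specified in RFC 4226."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low nibble picks the offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based variant (RFC 6238): the counter is the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at=None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side.

    The drift window is an assumed tolerance for clock skew, not a standard value.
    """
    now = time.time() if at is None else at
    counter = int(now // step)
    for drift in range(-window, window + 1):
        candidate = counter + drift
        if candidate < 0:
            continue
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(hotp(secret, candidate).encode(), submitted.encode()):
            return True
    return False
```

A remote access gateway would call something like `verify_totp` only after the password check succeeds, so that a stolen password alone is not enough to log in.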

The importance of being cyber-aware

Darren Guccione, CEO and co-founder of Keeper Security, points out the paradox of emerging technologies like AI: they offer advancements but also pose significant cybersecurity risks if not managed appropriately.

Guccione stresses that with today’s cybersecurity tools, we can address these emerging challenges, turning potential vulnerabilities into opportunities for strengthening our digital defenses.

As the threat landscape evolves, IT leaders must adapt to protect against these emerging threats. Implementing solutions like password managers and privileged access management systems is crucial. These tools enforce robust password protocols and manage access to critical assets, forming a comprehensive security strategy that limits unauthorized access and enhances the resilience of cybersecurity infrastructures.
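As a rough illustration of what enforcing a password protocol can mean in practice, the sketch below checks a candidate password against a simple policy. The minimum length, the character-class rules, and the tiny deny list are hypothetical values chosen for the example; they do not come from Keeper Security or its study, and real password managers apply far more extensive checks.

```python
import string

# Hypothetical policy values for illustration only.
MIN_LENGTH = 12
DENY_LIST = {"password", "123456", "qwerty", "letmein"}


def policy_violations(password: str) -> list[str]:
    """Return the list of policy rules the password breaks (empty if none)."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    if password.lower() in DENY_LIST:
        problems.append("appears on the deny list of common passwords")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letter")
    if not any(c.isupper() for c in password):
        problems.append("no uppercase letter")
    if not any(c.isdigit() for c in password):
        problems.append("no digit")
    if not any(c in string.punctuation for c in password):
        problems.append("no symbol")
    return problems


if __name__ == "__main__":
    for candidate in ("password", "Correct-Horse-Battery-9"):
        print(candidate, "->", policy_violations(candidate) or "ok")
```

Returning every violation, not just the first, lets an administrator or user see exactly which rules a weak password fails.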
