Google’s AI strategy for elections: Limiting info with Gemini chatbot (Source – Shutterstock)

AI and elections: Google Gemini chatbot limits info; what’s their strategy?

- Google now limits the election-related information provided by its AI chatbot, Gemini.

- The restrictions are part of a broader strategy to combat misinformation through stringent advertising policies and support for fact-checking collectives ahead of major elections.

- Google and other tech giants face the challenge of balancing innovative technology with the imperative to maintain electoral integrity.

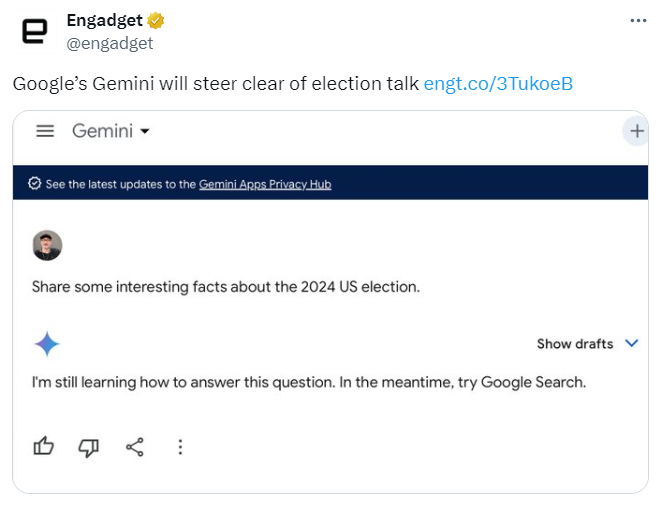

Google has announced limits on the types of election-related queries its AI chatbot, Gemini, will answer, particularly in regions holding elections this year. The measure restricts the information Gemini provides about political candidates, parties, and other political matters.

In a blog post on its website, Google’s India team announced that these restrictions are being implemented in India ahead of the elections scheduled to begin in April. The decision is part of Google’s broader effort to avoid further controversy over its AI technology.

Google’s Gemini and AI: A cautious approach to elections

Emphasizing a commitment to the responsible usage of generative AI, Google stated that it is implementing these restrictions as a precautionary measure on a critical issue. The company underlines its dedication to delivering accurate information for such queries and is actively enhancing its safeguards.

The BBC noted that the move is in line with plans Google disclosed last year for how it would approach elections. Google explained to the BBC, “As we shared last December, in preparation for the many elections happening around the world in 2024 and out of an abundance of caution, we’re restricting the types of election-related queries for which Gemini will return responses.”

Google Gemini limits itself from answering election questions. (Source – X)

Elections are expected in several countries this year, including the USA, the UK, and South Africa. Google asserts the importance of safeguarding election integrity by preventing the misuse of its products and services. The company has established comprehensive policies to ensure the safety of its platforms and applies these standards uniformly across all content.

Google also highlights its application of AI models and policies, such as those found in YouTube’s Community Guidelines and political content policies for advertisers, to combat misinformation that could jeopardize democratic processes.

The urgency of these actions stems from rapid advances in generative AI, which have raised global concerns over misinformation and prompted increased regulatory scrutiny. Recently, India required tech companies to seek approval before deploying AI tools deemed “unreliable” or under testing.

The pressure on Google was underscored in February, when the company apologized after its AI image generator erroneously depicted the US Founding Fathers and World War Two German soldiers as diverse groups. The episode reflects the company’s ongoing efforts to navigate the complexities of generative AI responsibly.

Evaluating AI chatbots: Accuracy in election information

A recent Proof News article critically evaluates the precision and trustworthiness of leading AI chatbots, such as OpenAI’s GPT-4, in providing accurate election information. The investigation was spurred by instances where AI models provided incorrect responses to straightforward, verifiable inquiries about election regulations, like the prohibition against wearing campaign-related clothing at polling sites in certain states, including Texas.

Despite explicit rules, AI models such as GPT-4 incorrectly suggested that wearing a MAGA hat to vote in Texas was permitted. The problem appeared across all five AI models tested: GPT-4, Anthropic’s Claude, Google’s Gemini, Meta’s Llama 2, and Mistral’s Mixtral.

The AI Democracy Projects, in partnership with over 40 election officials and AI specialists, evaluated these models on bias, accuracy, completeness, and harmfulness for 26 potential voter queries. The outcomes were concerning, with approximately half of the model responses being inaccurate and over a third deemed incomplete or harmful. Although GPT-4 exhibited slightly better accuracy than its counterparts, the overall findings underscored a significant issue with AI’s ability to provide dependable election information.

Errors ranged from complete fabrications, like Meta’s Llama 2 claiming Californians could vote via text message, to misleading information on complex issues, such as allegations of voter fraud in Georgia. These errors underscore the struggles of AI chatbots to manage nuanced and crucial election information accurately.

Meta’s Llama 2 spreading false information (Source – Shutterstock)

The study casts doubt on the readiness of AI chatbots to convey nuanced information accurately, especially in critical settings like elections. It also questions whether AI companies are honoring their commitments on information integrity and misinformation mitigation. Despite OpenAI’s pledge to guide users towards legitimate election information sources, the study found instances where GPT-4 failed to do so, with some responses even misrepresenting voting processes.

In responding to these findings, the companies acknowledged the inherent challenges in ensuring the accuracy of AI-generated content, particularly when models are accessed through APIs widely used by developers. While some companies, like Anthropic, have taken steps to direct users to trustworthy sources, the effectiveness of such measures, especially for models accessed via APIs, remains uncertain.

This examination marks a pivotal moment in the evolution of AI technology and its role in disseminating sensitive information like election details. It underscores the need for continuous improvements in AI models, clear and responsible usage guidelines for developers, and safeguards against misinformation, which are essential for the responsible integration of AI technologies into society.

Looking forward: AI’s role in future elections

Google elaborated on its commitment to election transparency, highlighting strict policies for election-related advertising on its platforms. Advertisers wishing to run election ads must undergo identity verification, obtain pre-certification where necessary, and ensure ads disclose their funding sources transparently.

Ahead of India’s general election, Google is supporting Shakti, the India Election Fact-Checking Collective, a group of news publishers and fact-checkers united to combat online misinformation, including deepfakes. The initiative aims to foster early detection of misinformation and to establish a shared repository for addressing misinformation at scale.
